After reading this article you will:

#1: have a better understanding of the Streaming approach that can be used to integrate with Salesforce;

#2: see the pros and cons of this approach;

#3: know a little more about Apache Kafka;

#4: see how you can maintain your integration;

#5: see how streaming works in practice;

#6: have more food for thought ;)

Shall we begin?

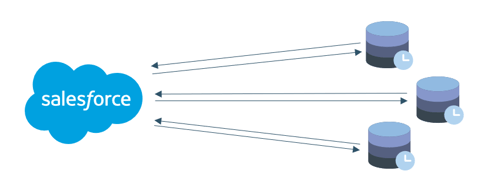

Streaming 101

With the Traditional integration approach, data are pulled from Salesforce by an external system. Any number of external systems can pull data from Salesforce, but each request is processed by Salesforce separately and consumes system resources. In addition, data pulled from Salesforce represent the system state at a specific point in time, so there is no way to see the history of changes made to your object.

With the Streaming approach, data are pushed from Salesforce. Any number of external systems can subscribe to the published data, and that data can be adapted and transformed to the target system's needs beforehand. As an additional benefit of the Streaming approach, all changes made to your objects can be tracked in real time.

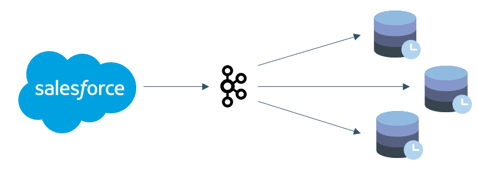

Message Broker

When using the Streaming Integration approach, it is important to choose the correct message broker - a type of middleware software that provides a standardized means of handling the flow of data between an application’s components.

We prefer to use Apache Kafka - a low-latency distributed streaming platform. It retains messages until external systems request them, keeps them in the right sequence (Kafka preserves order within a partition), and protects them from getting lost. Different consumers can process messages in parallel with almost no interference.
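To make the idea of independent consumers more concrete, here is a minimal in-memory sketch of a single ordered log with one read position per consumer. It is a toy model, not the Kafka client API: the `ToyLog` class and the consumer names are made up for illustration.

```python
from collections import defaultdict


class ToyLog:
    """In-memory stand-in for one ordered message log (not a Kafka client)."""

    def __init__(self):
        self._messages = []               # append-only, so order is preserved
        self._offsets = defaultdict(int)  # next position to read, per consumer

    def publish(self, message):
        """Append a message and return its position in the log."""
        self._messages.append(message)
        return len(self._messages) - 1

    def poll(self, consumer, max_messages=10):
        """Return the next unread messages for this consumer, in order."""
        start = self._offsets[consumer]
        batch = self._messages[start:start + max_messages]
        self._offsets[consumer] = start + len(batch)
        return batch


log = ToyLog()
for change in ["created", "updated", "deleted"]:
    log.publish(change)

# Each consumer reads at its own pace without affecting the other.
web_app_batch = log.poll("web-app", max_messages=2)
reporting_batch = log.poll("reporting")
web_app_rest = log.poll("web-app")
```

Messages stay in the log after being read, so a slow consumer simply picks up where it left off.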

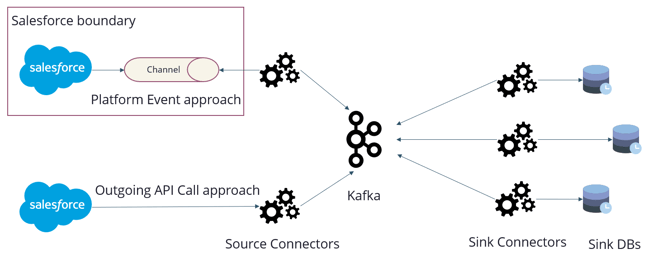

Salesforce Streaming options

There are several ways in which data from Salesforce can reach Apache Kafka:

- Outgoing API Calls

- Streaming API

  - Push Topic

  - Change Data Capture

  - Generic Events

  - Platform Events

With the Outgoing API Call approach, Salesforce calls the underlying source connector at the moment of the change, and the connector pushes the data into Apache Kafka.

With the Streaming API approach (the Platform Events option is covered in a separate table), the source connector subscribes to a Salesforce channel, receives events, and forwards them to Apache Kafka.
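The subscribe, receive, and forward loop of such a source connector can be sketched roughly as follows. This is only an illustration under several assumptions: the event shape (`replayId` plus `payload`), the custom field names ending in `__c`, and the topic name are hypothetical, and the sketch assumes replay IDs grow over time so that a redelivered event can be skipped. The `send` callable stands in for a real Kafka producer.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SourceConnector:
    """Forwards events received from a channel to a broker topic."""
    topic: str
    send: Callable[[str, str, dict], None]  # (topic, key, value), e.g. a producer wrapper
    last_replay_id: int = -1                # assumption: replay IDs increase over time

    def handle(self, event: dict) -> bool:
        """Forward one event; return False if it was already forwarded."""
        replay_id = event["replayId"]
        if replay_id <= self.last_replay_id:
            return False                    # redelivery, e.g. after a reconnect
        payload = event["payload"]
        self.send(self.topic, payload["AccountId__c"], payload)
        self.last_replay_id = replay_id     # remember where to resume from
        return True


sent = []
connector = SourceConnector(
    topic="salesforce.account-changes",     # hypothetical topic name
    send=lambda topic, key, value: sent.append((topic, key, value)),
)
events = [
    {"replayId": 1, "payload": {"AccountId__c": "001A", "PostalCode__c": "LV-1010"}},
    {"replayId": 2, "payload": {"AccountId__c": "001A", "PostalCode__c": "LV-1050"}},
    {"replayId": 2, "payload": {"AccountId__c": "001A", "PostalCode__c": "LV-1050"}},
]
results = [connector.handle(event) for event in events]
```

Using the record identifier as the message key is a design choice of this sketch: it keeps all changes to one record together, so they can be consumed in order.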

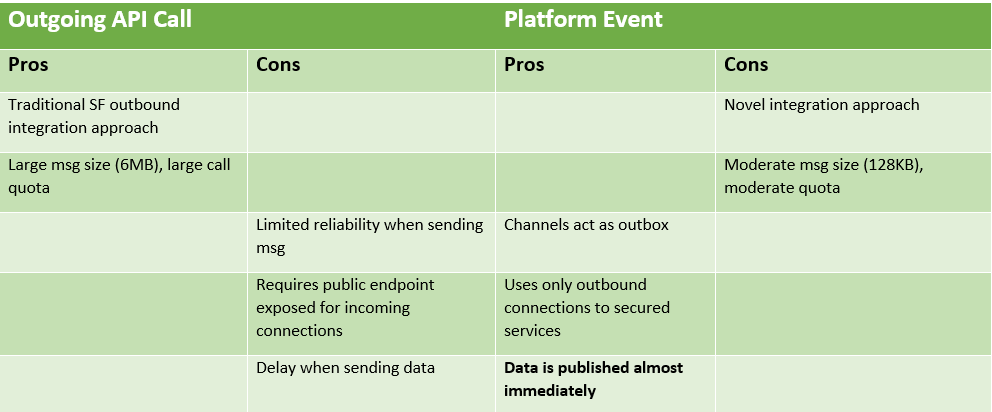

Both approaches have their pros and cons that you should consider before choosing the right one for you. Here are our thoughts:

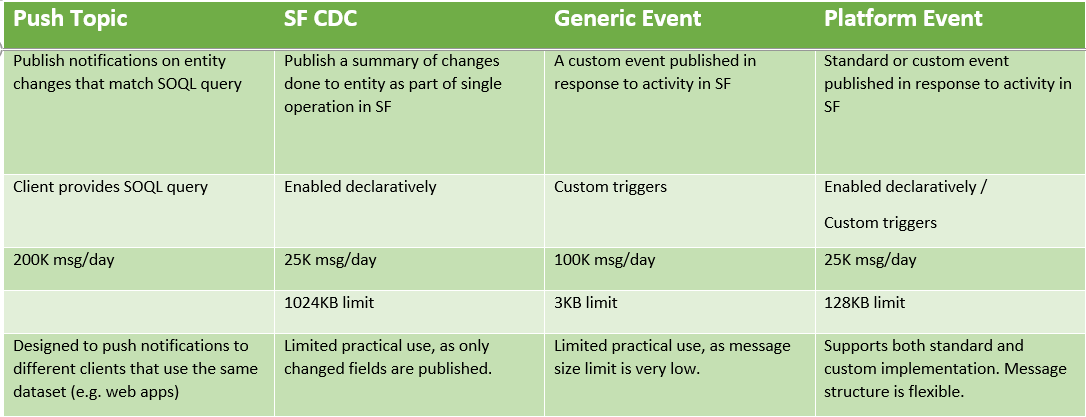

The Salesforce Streaming API offers several options; here is a concise comparison table of them:

Are you wondering which Streaming API option you should choose? It really depends on your use case and requirements. From here on, however, we will stick to Platform Events, as we see them as the most generic and flexible option.

Less theory, more practice

Ready to see how it works? In our scenario, we have two applications that allow users to create, read, update, and delete customer information. Both applications - Salesforce and the Web app - need to stay up to date with the latest customer information.

To avoid overloading Salesforce with simultaneous calls for CRUD operations, we decouple Salesforce from the web application using the Streaming approach, with Apache Kafka in the middle.

Here we can see how we update the Account's ‘Billing Address’ in Salesforce and how it is automatically updated in the Web app. Then we update ‘Postal Code’ in the Web app, and after a page refresh in Salesforce we see that ‘Postal Code’ is updated there too:

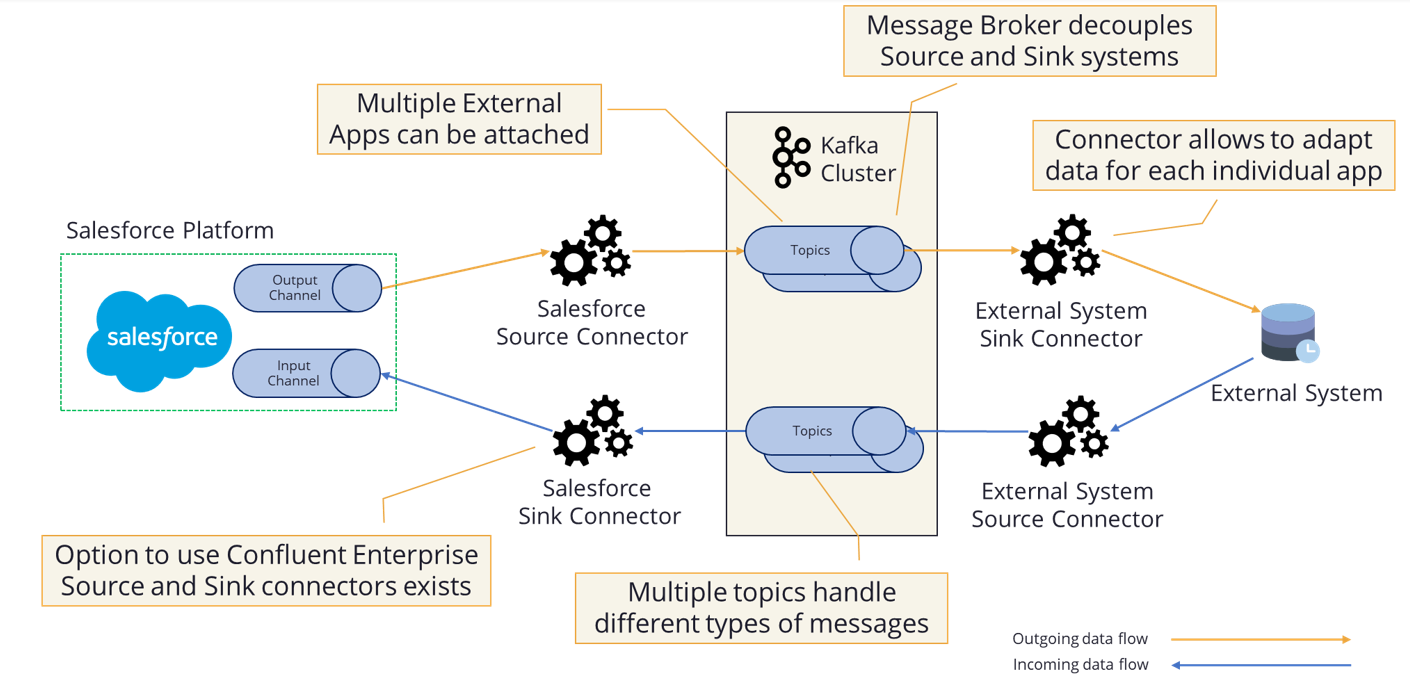

Below you can see a diagram of the demo and more technical details of how it was achieved:

- The Salesforce Source Connector is a .NET-based container that listens for Platform Events in Salesforce and pushes them into Kafka.

- The External System Sink Connector is a .NET container that transforms data from Salesforce into a model suitable for the web application and saves it into the external system's database (we use Cosmos DB).

- The Web Application is a Vue application with NestJS (a Node.js framework) as its backend. All updates in the database are immediately shown in the Vue app with the help of WebSockets.

When any change happens in the Web application, the External System Source Connector forwards it to Kafka, and the Salesforce Sink Connector pushes it into Salesforce. Thus, both of your systems stay in sync in near real time.
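The transformation step of the sink connector could look roughly like this. The demo connectors are .NET containers; this sketch uses Python only for brevity. The target model, the function names, and the dictionary that stands in for the database are assumptions made for illustration; the `Billing...` names follow the standard Salesforce Account address fields.

```python
def to_customer(account: dict) -> dict:
    """Map a Salesforce-style Account payload to a hypothetical web app model."""
    return {
        "id": account["Id"],
        "name": account.get("Name"),
        "billingAddress": {
            "street": account.get("BillingStreet"),
            "city": account.get("BillingCity"),
            "postalCode": account.get("BillingPostalCode"),
        },
    }


def upsert(store: dict, customer: dict) -> None:
    """Insert or replace the customer document, keyed by its id."""
    store[customer["id"]] = customer


store = {}  # stands in for the external system's database
account = {
    "Id": "001A",
    "Name": "Acme",
    "BillingStreet": "Brivibas iela 1",
    "BillingCity": "Riga",
    "BillingPostalCode": "LV-1010",
}
upsert(store, to_customer(account))

# A later event for the same Account replaces the stored document.
upsert(store, to_customer({**account, "BillingPostalCode": "LV-1050"}))
```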

How about Maintenance?

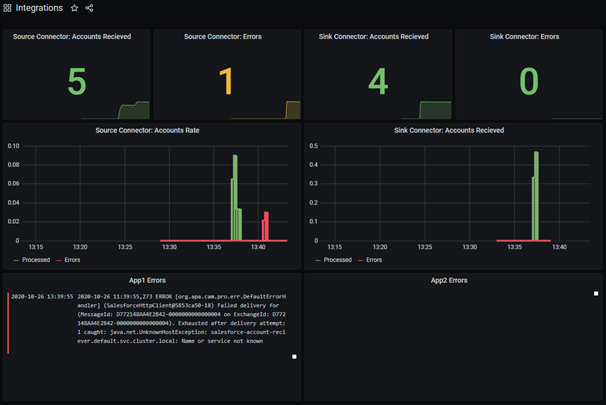

Any type of integration has its challenges: delivery and deployment, monitoring, and traceability are a few of them. In our integration solutions we prefer to use Prometheus to collect metrics from integration components, Elasticsearch to collect logs, and Grafana to display statistics. Of course, the tech stack may vary based on your needs, but we build all of our integration components with the Twelve-Factor App methodology in mind; they are cloud-native by nature and can thus be easily deployed in the cloud, on-premises, or on your Kubernetes cluster. Here is how we see what takes place with our integrations:

Conclusion

The most common question that we receive is: when should we consider using Streaming? We see two cases in which the Streaming approach is preferable to the Traditional Integration approach:

#1: Salesforce as the primary system with a fleet of secondary follower-systems

In this case Streaming replaces the need for REST calls from secondary systems towards Salesforce, thus reducing load and improving the performance of your solutions. Data from Salesforce are synced with your application's databases in the background; as a result, data are stored closer to your app and pre-formatted to its needs.

#2: Two primary systems, one of which is Salesforce

Two-way streaming is applicable in this case, and it allows you to cut costs and facilitate migration from your older system to Salesforce. During the transition, your current primary system will always be in sync with Salesforce data. Yes, this approach brings several challenges, such as sync conflict resolution and error handling, but in Ideaport Riga's experience these can be handled gracefully.

As you have seen by now, the Streaming approach has its pros and cons, but if your use case requires a high-performance solution or multi-master system support, Streaming justifies the challenges it introduces.
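As an illustration of what sync conflict resolution can involve, here is a minimal sketch of one possible strategy: last-writer-wins combined with echo suppression. It is not necessarily the approach used in the demo, and last-writer-wins can silently drop a concurrent update, so treat it as a starting point. The `origin` and `modified_at` fields are assumptions about the message format.

```python
def apply_change(store: dict, change: dict, target_system: str) -> str:
    """Apply a change to the target's store unless it is an echo or is stale.

    Returns "applied", "echo", or "stale".
    """
    if change["origin"] == target_system:
        return "echo"   # the target produced this change itself; do not loop it back
    current = store.get(change["id"])
    if current is not None and change["modified_at"] <= current["modified_at"]:
        return "stale"  # the target already holds a newer (or the same) version
    store[change["id"]] = change
    return "applied"


web_app_store = {}
outcomes = [
    apply_change(
        web_app_store,
        {"id": "001A", "origin": "salesforce", "modified_at": 100, "postalCode": "LV-1010"},
        target_system="web-app",
    ),
    apply_change(
        web_app_store,
        {"id": "001A", "origin": "web-app", "modified_at": 110, "postalCode": "LV-1050"},
        target_system="web-app",
    ),
    apply_change(
        web_app_store,
        {"id": "001A", "origin": "salesforce", "modified_at": 90, "postalCode": "LV-1001"},
        target_system="web-app",
    ),
]
```

Without the echo check, a change made in one system would travel to the other and straight back again, which is the main loop risk in two-way streaming.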

Final words

If you are planning to adopt Streaming, there are some additional considerations you should be aware of to make your solution sustainable and efficient in the long run:

- Whether to use two-way streaming, or to use streaming only for reading data while performing add/modify operations through standard REST calls, with the CQRS (Command Query Responsibility Segregation) principle in mind.

- Transaction support and eventual consistency.

- Which message structure to choose: hierarchical or flat.
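To illustrate the last point, the same customer message can be expressed either way. The sketch below converts a hierarchical message into a flat one; the field names and the dot separator are arbitrary choices for the example.

```python
def flatten(message: dict, prefix: str = "", sep: str = ".") -> dict:
    """Turn nested dictionaries into a single level with joined key paths."""
    flat = {}
    for key, value in message.items():
        path = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path, sep))  # descend into nested objects
        else:
            flat[path] = value
    return flat


hierarchical = {
    "id": "001A",
    "billingAddress": {"city": "Riga", "postalCode": "LV-1010"},
}
flat = flatten(hierarchical)
```

A flat structure is usually easier to map to table columns, while a hierarchical one keeps related fields grouped and closer to the source object model.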

Ideaport Riga consultants can help you with adopting streaming - do not hesitate to contact us!